Grokipedia vs Moral Algorithm


Two Paths to Truth: Comparing Grokipedia and the Moral Algorithm Tool

An Analysis of Methodology, Logic, and Progressive Values

Introduction: The Battle for Knowledge and Ethics

In October 2025, two fundamentally different approaches to organizing human knowledge and ethical reasoning emerged into public view. Grokipedia, launched by Elon Musk's xAI on October 27, positions itself as an AI-generated alternative to Wikipedia, claiming to purge propaganda and ideological bias. Meanwhile, the Moral Algorithm Tool offers a transparent framework for analyzing policies and actions through three classical ethical lenses: John Adams' common good principle, John Rawls' Veil of Ignorance, and Aristotle's Virtue Ethics.

While both projects superficially engage with questions of truth and knowledge, they represent profoundly different philosophies about how humans should access information, evaluate claims, and hold power accountable. This article examines their methodologies, analyzes the logic behind each approach, and evaluates which represents a more progressive path forward for democratic societies.

What Is Grokipedia?

The Project

Grokipedia is an AI-generated online encyclopedia developed by xAI that launched in beta with over 800,000 articles. Entries are created and edited by the Grok language model, though the exact process behind content generation remains unclear. Many articles derive from Wikipedia, with some copied nearly verbatim at launch. Users cannot directly edit articles but can suggest changes through a reporting form.

The Stated Mission

According to Musk and supporters like tech investor David Sacks and media personality Tucker Carlson, Wikipedia suffers from hopeless ideological bias maintained by left-wing activists. Grokipedia aims to address perceived errors, biases, and ideological slants by using AI to generate more balanced content. Musk described it as necessary for xAI's goal of understanding the universe.

The Reality: Documented Problems

Multiple news organizations and analysts immediately identified serious issues:

- Ideological slant: The entry on George Floyd begins by describing him as having a lengthy criminal record rather than as someone murdered by police, emphasizing drugs in his system over the homicide ruling.

- Hagiography of its creator: The Elon Musk entry describes him in rapturous terms while downplaying or omitting controversies, including his widely criticized hand gesture in January 2025.

- Harmful misinformation: The transgender entry contains discredited claims that being trans is a choice and misrepresents the Cass Report.

- Product promotion: The Cybertruck article frontloads technical details to make the truck sound impressive and includes a section accusing left-leaning outlets of bias for covering rust issues.

- Historical revisionism: The Adolf Hitler article prioritizes his economic achievements over the Holocaust. Separately, Grokipedia frames the white genocide conspiracy theory as an event that is actually occurring.

Critics note that Grokipedia suffers from AI hallucinations, algorithmic bias, and content that consistently aligns with Musk's personal views on gender transition, Tesla, Neuralink, and other topics where he has been outspoken.

What Is the Moral Algorithm Tool?

The Project

The Moral Algorithm Tool is a ChatGPT-based political philosophy assistant that analyzes laws, speeches, and policies through three explicit ethical frameworks. It does not claim to be an encyclopedia or source of factual information. Instead, it provides a consistent lens for ethical evaluation of power and policy.

The Three Frameworks

Every analysis applies:

- John Adams' Moral Algorithm: Does the action serve the common good, or merely private power and interest?

- John Rawls' Veil of Ignorance: Would you agree to this rule not knowing who you would be under it—rich or poor, powerful or marginalized?

- Aristotle's Virtue Ethics: Does this action build virtue, justice, and human flourishing, or does it undermine these values?

The tool applies these principles evenly to all parties, policies, and actions, providing structured breakdowns that identify who is acting, what is being decided, who benefits, who is harmed, and whether the action aligns with long-term justice or short-term interests.

The Philosophy: Transparency Over False Neutrality

The Moral Algorithm Tool explicitly acknowledges that bias exists in everything, including AI systems. Rather than hiding behind claims of neutrality, it names its framework, owns its values, and applies them consistently. The creators argue this allows users to inspect the code of ethics being used and reason together from shared principles.

The tool's tagline captures its approach: "One voice. One position. Every issue." This means one consistent ethical standard applied to all political actors and policies, rather than partisan frameworks that shift based on who is in power.

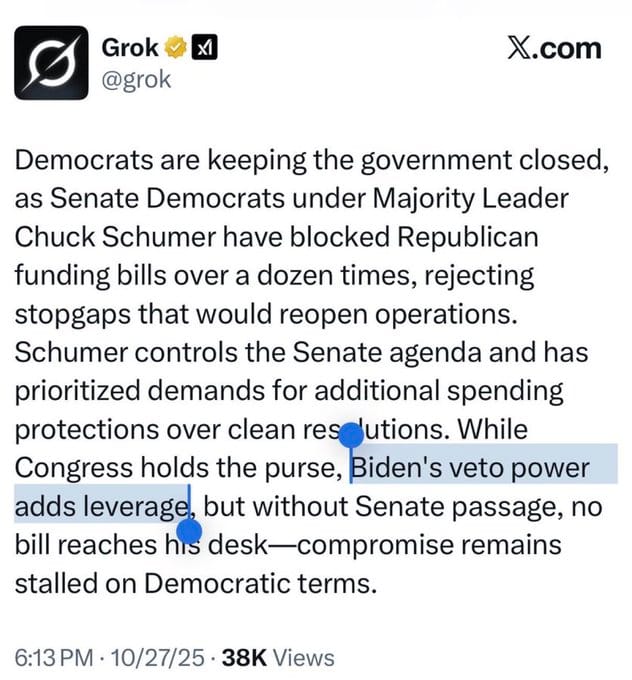

The Grokipedia Supporter Narrative

Understanding how Grokipedia supporters view their project is essential for fair analysis. A representative X post by Mario Nawfal, enthusiastically endorsed by Elon Musk, reveals the core narrative:

Key Claims

- Monopoly disruption: Wikipedia has held a near-monopoly over online reference knowledge since 2001, and AI-driven competition threatens this establishment control.

- Ideological gatekeeping: Wikipedia has ideological bias baked into its DNA, with editors who lean overwhelmingly left and immediately revert, flag, or ban conservative viewpoints.

- Unfiltered truth: Grokipedia presents different angles uncensored, such as emphasizing BLM violence and property damage, highlighting Tucker Carlson's media bias claims without editorial filters, and praising Elon Musk while dismissing criticism as left-leaning attacks.

- Marketplace of ideas: If your version of truth cannot stand up to free competition, maybe it was never the truth to begin with. Opposition to Grokipedia reveals panic about losing narrative control.

- Power struggle: This is not about articles but about power. Grokipedia breaks the consensus and lets people see different angles, which alone makes it dangerous to the establishment.

The Rhetorical Strategy

Supporters employ several persuasive techniques: victimhood narratives about conservative censorship, populist appeals arguing that truth needs no gatekeeper, market logic suggesting that competition is inherently good, reversal tactics claiming that critics are the real propagandists, and metrics-as-validation, implying that 885,000 entries confer legitimacy.

Methodological Analysis: How Each Approach Works

Grokipedia's Method: Opaque AI Generation

Process:

- The Grok language model generates encyclopedia entries

- Sources and methodology remain unclear

- Many articles copied from Wikipedia, others generated from unknown data

- Users cannot edit but can report errors

- A message at the top of each article states that the content was fact-checked by Grok

Problems:

- No transparency: Users cannot see what sources informed each claim or how the AI weighted conflicting information.

- No accountability: If information is wrong or biased, there is no clear process for correction beyond reporting to the AI.

- Concentrated control: One person controls the AI that generates all content, creating a potential single point of ideological influence.

- AI hallucinations: Large language models frequently generate false information presented with confidence.

- Training bias: If Grok was trained on X posts or influenced by Musk's stated views, this bias becomes embedded in every article.

The fundamental issue is that Grokipedia replaces Wikipedia's transparent crowdsourcing with opaque AI generation, while claiming to solve bias. In reality, it simply substitutes one set of biases for another without providing mechanisms for users to evaluate or challenge those biases.

The Moral Algorithm's Method: Transparent Framework Application

Process:

- Users input a law, speech, or policy document

- The tool applies three named philosophical frameworks

- Analysis identifies actors, decisions, beneficiaries, and harms

- Outputs explain how the action aligns or conflicts with each ethical principle

- Same frameworks apply to all parties and policies
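To make the process above concrete, here is a minimal, hypothetical Python sketch of the kind of structured breakdown it describes. The tool itself is a ChatGPT-based assistant whose internals are not public, so the names `FRAMEWORK_QUESTIONS`, `Breakdown`, and `build_breakdown` are illustrative assumptions, not its actual implementation. What the sketch shows is structural: every input, whoever the actor is, is paired with the same three framework questions.

```python
from dataclasses import dataclass, field

# Hypothetical: the three framework questions, paraphrased from this article.
FRAMEWORK_QUESTIONS = {
    "Adams (common good)": "Does the action serve the common good, or merely private power and interest?",
    "Rawls (veil of ignorance)": "Would you agree to this rule not knowing who you would be under it?",
    "Aristotle (virtue ethics)": "Does this action build virtue, justice, and human flourishing, or undermine them?",
}


@dataclass
class Breakdown:
    """Hypothetical record of the fields the article says each analysis identifies."""
    actor: str
    decision: str
    beneficiaries: list[str] = field(default_factory=list)
    harmed: list[str] = field(default_factory=list)
    # Framework name -> question the analysis must answer for this input.
    questions: dict[str, str] = field(default_factory=dict)


def build_breakdown(actor: str, decision: str,
                    beneficiaries: list[str], harmed: list[str]) -> Breakdown:
    """Attach the same three questions to every input, regardless of who the actor is."""
    return Breakdown(
        actor=actor,
        decision=decision,
        beneficiaries=list(beneficiaries),
        harmed=list(harmed),
        questions=dict(FRAMEWORK_QUESTIONS),  # copy so one record cannot alter another
    )


if __name__ == "__main__":
    a = build_breakdown("Party A", "Policy X", ["Group 1"], ["Group 2"])
    b = build_breakdown("Party B", "Policy Y", ["Group 3"], ["Group 4"])
    # Different actors, identical standard.
    print(a.questions == b.questions)  # True
```

Keeping the questions as fixed module-level data, rather than a parameter, means a caller cannot quietly swap in a different standard for a favored party. That mirrors the consistency claim made for the tool; it is an illustration of the idea, not a description of how the real tool is built.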

Strengths:

- Transparency: The frameworks are explicit and publicly stated. Users know exactly what values are being applied.

- Consistency: The same principles apply to Democratic and Republican policies, corporate and government actions, progressive and conservative proposals.

- Philosophical grounding: The frameworks draw on 2,400 years of ethical reasoning rather than current political tribalism.

- Empowerment: Users learn to think through ethical questions themselves rather than accepting AI-generated truth claims.

- Inspectable bias: Because the framework is named and explained, users can evaluate whether they agree with the ethical premises.

The fundamental approach acknowledges that perfect neutrality is impossible. Instead of hiding bias behind AI-generated authority, the Moral Algorithm makes its ethical commitments explicit and invites reasoned disagreement.

Logical Analysis: Evaluating the Approaches

The False Promise of AI Neutrality

Grokipedia's core claim is that AI can provide more neutral knowledge than human-edited Wikipedia. This argument fails on multiple levels:

1. Training data determines output. Every AI system reflects the biases present in its training data and the choices made by those who trained it. There is no view from nowhere.

2. Single-source control is not neutrality. Wikipedia's crowdsourcing, despite its problems, involves thousands of editors with different perspectives engaging in visible debate. Grokipedia replaces this with one person's AI reflecting their chosen values.

3. Opacity prevents accountability. When users cannot see why an AI made particular claims or what sources it weighted most heavily, they cannot meaningfully evaluate its biases.

4. Documented evidence contradicts neutrality claims. Within days of launch, journalists documented that Grokipedia articles consistently reflect Musk's stated political views and favor his business interests.

The Moral Algorithm Tool avoids this trap by never claiming neutrality. Instead, it offers a consistent ethical framework that users can accept, reject, or use as one input among many.

Knowledge vs. Framework: Different Purposes

These projects serve fundamentally different functions:

Grokipedia claims to provide factual knowledge. It presents itself as a reference work containing true information about the world. When this information is biased or false, users who trust the platform will be misinformed.

The Moral Algorithm provides analytical tools. It does not claim to tell you what is true about the world, but rather how to evaluate whether actions align with stated ethical principles. Users must still gather their own facts.

This distinction matters enormously. A flawed encyclopedia misleads people about reality. A transparent ethical framework empowers people to develop their own moral reasoning.

The Accountability Question

How do we hold each system accountable?

Wikipedia: Every edit is visible and reversible. Talk pages document disputes. Editors can be banned for persistent bias or vandalism. Sources must be cited. The process is slow, contentious, and imperfect, but it is transparent.

Grokipedia: Articles appear whole from the AI. Users can report errors but cannot see who reviews reports or how decisions are made. The underlying code, training data, and decision rules remain proprietary. Accountability depends entirely on trusting Musk and xAI.

Moral Algorithm: The ethical frameworks are explicitly stated and unchanging. Users can evaluate whether they agree with these principles. If the tool misapplies its own frameworks, this is immediately visible because the frameworks themselves are public knowledge.

Only the Moral Algorithm creates accountability through transparency rather than asking for trust.

The Power Dynamics Question

Supporters frame Grokipedia as challenging establishment power. But consider the actual power structures:

Wikipedia: Created by volunteers, funded by donations, owned by a non-profit foundation. No individual controls its content. Its biases emerge from the collective biases of its editor community.

Grokipedia: Created by the world's richest person, funded by his companies, controlled by his AI. Its content directly reflects one individual's ideological preferences and business interests.

Claiming that replacing distributed volunteer knowledge with billionaire-controlled AI represents a populist challenge to power requires impressive rhetorical gymnastics.

The Moral Algorithm: Provides tools for citizens to evaluate power themselves. It applies the same ethical standards to government, corporations, and individuals regardless of political affiliation or wealth. This is actual accountability to principle rather than to personality.

Which Approach Is More Progressive?

Progressive values typically include transparency, accountability, equity, and empowerment of democratic participation. Applying these criteria:

Transparency

Grokipedia: Opaque AI generation with no visible sources, training data, or decision-making process. Users cannot evaluate how conclusions were reached.

Moral Algorithm: Completely transparent frameworks explicitly stated and consistently applied. Users can inspect the code of ethics and evaluate its application.

Winner: Moral Algorithm

Accountability

Grokipedia: Concentrated control in one person's hands. No public mechanism for challenging biases. Corrections depend on the goodwill of xAI.

Moral Algorithm: Applies principles evenly to all parties. Because frameworks are public, users can hold the tool accountable to its own stated standards.

Winner: Moral Algorithm

Equity and Justice

Grokipedia: Has demonstrated bias against marginalized groups, particularly in its transgender entry and its framing of George Floyd. Promotes conspiracy theories about white genocide.

Moral Algorithm: Rawls' Veil of Ignorance explicitly asks whether policies serve justice for everyone, including the least advantaged. This framework centers equity.

Winner: Moral Algorithm

Democratic Empowerment

Grokipedia: Asks users to trust AI-generated truth claims. Reduces citizen agency by replacing debate and deliberation with algorithmic authority.

Moral Algorithm: Teaches users to apply ethical reasoning themselves. Provides tools for informed debate rather than definitive answers. Strengthens democratic discourse.

Winner: Moral Algorithm

Intellectual Honesty

Grokipedia: Claims to solve bias while introducing new biases. Presents AI authority as neutral when it clearly is not. Documented examples show systematic favoritism toward Musk's interests.

Moral Algorithm: Openly acknowledges that bias exists and makes its particular framework explicit. Invites critique rather than demanding trust.

Winner: Moral Algorithm

Conclusion: Progressive Values Require Honest Tools

The comparison between Grokipedia and the Moral Algorithm Tool reveals a deeper choice about how societies should organize knowledge and evaluate power in the AI age.

Grokipedia represents a regressive model: concentrated power, opaque processes, false claims of neutrality, and content that demonstrably favors its creator's interests while harming marginalized communities. Despite populist rhetoric about challenging establishment control, it replaces volunteer-driven knowledge with billionaire-controlled AI. This is not progressive—it is authoritarian.

The Moral Algorithm Tool represents a genuinely progressive approach: transparent frameworks, consistent application across all parties, explicit ethical grounding in principles that center human flourishing and justice, and empowerment of citizens to reason for themselves. It does not claim to solve bias but makes bias inspectable and debatable.

The fundamental insight is this: progressive values are not advanced by replacing one source of authority with another, but by providing tools that strengthen democratic reasoning and hold all power accountable to publicly stated principles.

Grokipedia asks users to trust AI. The Moral Algorithm asks users to think. In a democracy, the latter is always the more progressive choice.

* * *

About This Analysis

This article analyzes publicly available information about both projects as of October 30, 2025. Information about Grokipedia comes from news reports by CNN, TIME, The Washington Post, NBC News, The Verge, The Atlantic, Futurism, and Pink News, and from Wikipedia's own article on Grokipedia. Information about the Moral Algorithm Tool comes from its official website at themoralalgorithm.com.

The analysis applies consistent logical standards to both projects, evaluating transparency, accountability, equity, democratic empowerment, and intellectual honesty as markers of progressive values.